Optimize Your AI Agent: 6 Proven Testing Approaches That Work

July 02, 2025

Ayush Kanodia

.jpg?w=1920&q=75)

AI agents are now part of how many businesses talk to their users. They answer questions, guide decisions, and help teams save time. But without proper testing, they can also respond in the wrong way, miss the context, or confuse the user. That can lead to frustration and break trust, especially when the system is used in customer-facing roles.

This blog is for teams and decision-makers who want to get more value out of their chatbots. If you’ve already built an AI agent or are planning to launch one, testing is what keeps it sharp and useful over time.

Let’s walk through 6 proven testing approaches that ensure your AI agents are reliable, safe, and effective.

Why Traditional QA Doesn’t Work for AI Agents?

Standard software testing relies on fixed inputs and expected outputs. If you give the same input, you expect the same result every time. That works well for traditional applications where logic is rule-based and predictable. But AI agents don’t work like that. They generate responses based on patterns, probabilities, and context. You can ask the same question twice and still get slightly different answers.

This is especially true for chatbots and other conversational AI systems. These agents aren’t just following scripts. They’re making real-time decisions on how to respond, what to prioritize, and how to handle unclear or incomplete inputs. Testing them requires checking how the agent behaves in a range of situations, from clear questions to confusing ones.

That’s why regular QA methods fall short. You need testing approaches that account for variation, edge cases, and real-world complexity. As more UAE businesses adopt conversational AI in areas like customer service, sales, and HR, there’s a growing need to test these systems in smarter, more flexible ways.

Thus, specialized testing methods are required, and we will walk through what they look like in practice.

6 Proven AI Agent Testing Best Practices

AI agent and chatbot testing is not limited to checking if it replies to a few sample prompts. It involves understanding how it performs across a variety of real-world inputs, how it adapts to conversations, and how it stays safe and useful over time. These six approaches are widely used across the industry and form the foundation of strong chatbot testing best practices.

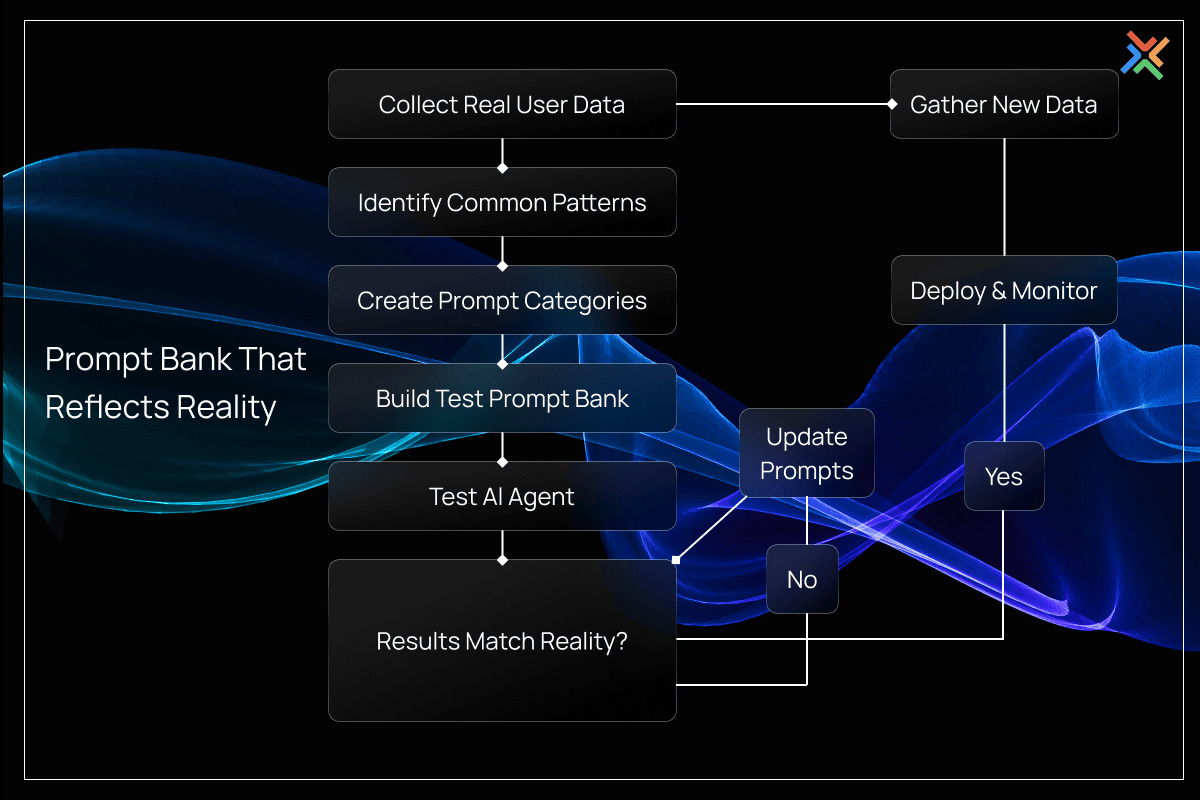

1. Build a Prompt Bank that Reflects Real User Inputs

Start with a wide collection of prompts that reflect how real users speak. This should include common questions, regional phrases, incomplete queries, and even spelling mistakes. A good prompt bank includes:

- FAQs that your support team already handles

- Informal messages with typos or abbreviations

- Domain-specific terms from your industry

- Local language variations for bilingual users

For UAE-based chatbots, this also means testing prompts in both English and Arabic, as many users switch between languages or expect bilingual support. You should also consider local expressions or terms common in UAE industries like real estate, travel, or finance. Testing across this variety helps catch gaps early and ensures your chatbot feels familiar and useful to the people it's built for.

2. Use Human-in-the-Loop Testing for Nuance

Some errors only humans can spot. This includes problems with tone, confusing responses, or missed context. Conversational AI testing often needs people to rate responses for quality. You can set up a simple review system where test prompts are answered by the bot and then scored by team members for:

- Accuracy

- Clarity

- Usefulness

- Appropriateness of tone

Add short notes to explain why a response worked or didn’t. These notes help developers fix issues without guessing. In many cases, especially when a chatbot handles sensitive topics like healthcare or banking, this kind of hands-on review is the only way to spot subtle mistakes that could cause confusion or risk.

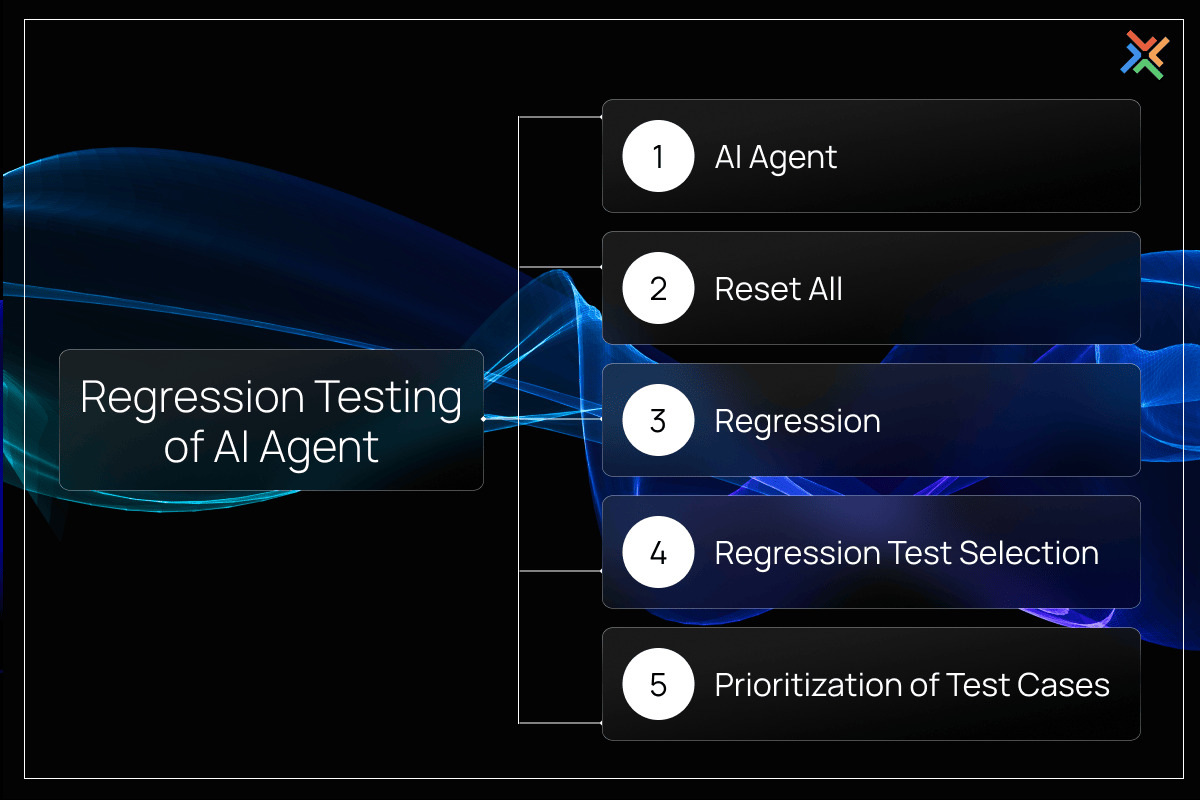

3. Set Up Regression Testing to Catch Drift

AI agents are not static. They change when you retrain them or add new features. Over time, this can cause them to forget how to answer something they handled well before. That’s why regression testing is so important.

To do this, lock in a core set of test prompts and save the ideal responses. Every time you make a change to the model or pipeline, rerun the same prompts and check if the new responses are still accurate. This can be automated using tools that compare semantic meaning rather than exact wording.

Many companies use in-house scripts for this, while others rely on chatbot QA platforms that support version testing. The goal is to catch sudden drops in performance before they reach end users.

4. Test for Context Retention in Multi-Turn Conversations

One of the key challenges in chatbot design is making sure the agent can hold a conversation over multiple turns. It should remember what the user said earlier, avoid repeating questions, and follow the thread of the dialogue.

Here’s what to test:

- Can it handle a follow-up question without losing context?

- Can it adapt when the user changes the topic midway?

- Does it recall important details like location or user preferences?

- Can it close the loop when a task is complete?

This is especially useful in business flows like booking appointments, handling support tickets, or guiding users through product selection. If your chatbot forgets what was said two messages ago, it breaks the experience. Testing tools that simulate full conversations rather than single prompts are helpful here.

5. Stress-Test Safety and Guardrails

Every chatbot needs clear boundaries. Users will sometimes ask strange, inappropriate, or even harmful things. Your chatbot should be prepared to respond safely or not respond at all.

Test your chatbot against:

- Inappropriate or offensive language

- Attempts to make the bot share private or sensitive data

- Inputs that try to trick it into bypassing filters

- Biased or unethical prompts

This type of AI testing is especially critical in UAE sectors like healthcare, banking, and public services, where bots handle sensitive data or regulated content. Building guardrails and testing them regularly helps protect your brand and ensures compliance with both legal and ethical standards.

6. Use Multi-Dimensional Metrics to Measure Real Performance

It’s not enough to know if the chatbot is giving answers. You need to measure whether those answers are helping users and meeting business goals. Relying on a single metric like accuracy won’t give the full picture.

Here are key chatbot testing metrics to track:

- Task completion rate – Did the bot help the user reach their goal?

- Fallback frequency – How often did it say “I don’t know”?

- Response time – Was the reply quick enough?

- Toxicity or bias score – Did the answer include anything risky?

- User ratings or thumbs up/down – What did users think of the answer?

These numbers show whether your chatbot is just functional or actually useful. They help teams optimize chatbot performance over time, not just during development. Good testing ends with better results, and metrics are how you track that progress.

Bonus - Tools and Techniques That Make AI Agent Testing Easier

Testing doesn’t always have to start from scratch. There are reliable tools and frameworks that can speed up the process, help you scale your testing efforts, and catch issues faster.

Some popular chatbot testing tools and platforms include:

- Botium – An open-source framework built specifically for chatbot testing. It supports automated test cases, regression testing, and integration with CI/CD pipelines.

- Rasa Test Stories – Useful if you're working with Rasa, this lets you create conversation flows and test them as scripted paths.

- Microsoft Bot Framework Test Emulator – A local tool that helps you test your bot in a controlled environment before going live.

- Postman or REST clients – While not built just for chatbots, these are helpful when your bot has APIs that need regular input/output testing.

- Custom scripts with Python or JavaScript – Many teams build lightweight in-house scripts to test common flows, especially when working with their own AI models.

Along with tools, testing becomes easier when the development approach is structured. Teams that follow modular design and keep prompts, intents, and response logic separated often find it easier to test and update their agents. Maintaining clean logs, user feedback histories, and test prompt archives also goes a long way.

That said, tools are only part of the solution. What really helps is having the right expertise. An experienced AI chatbot development company will not only choose the right stack but also bring proven processes, testing routines, and fallback strategies. They know what to expect, what to avoid, and how to prepare your bot for real users, not just internal demos.

For businesses with limited in-house AI resources, working with a partner who already builds and tests chatbots regularly can save both time and money. It helps make sure your chatbot is well-tested and ready for real-world use.

Why This Matters for UAE-Based Businesses

Businesses in the UAE are adopting AI faster than ever, especially in areas like customer support, banking, healthcare, and government services.

AI agents used in finance need to respond clearly without making compliance errors. In healthcare, even a small misunderstanding in language can create serious risks. Government chatbots often handle requests in both English and Arabic, which means the AI must be tested for language switching, tone, and cultural context.

On top of that, local regulations around data privacy and automation require systems to behave responsibly. This is where localization and well-planned testing matter most. You’re not just checking how the chatbot performs; you’re checking if it works in the UAE market, with UAE users, under UAE rules.

At WDCS Technology, we take this seriously. As a leading AI development company in the UAE, we build and test AI agents with these local challenges in mind. Our approach includes everything from bilingual prompt testing to compliance-based safety checks, making sure every chatbot is ready for real users before it ever goes live.

Don’t Launch Before You Test

Every AI agent will only perform as well as it’s tested. From prompt banks and regression testing to safety guardrails and multi-turn conversations, these chatbot testing best practices help prevent poor user experiences and costly mistakes.

Testing isn’t a one-time checklist. It’s something that should continue even after launch. As your users change, your bot should adapt. Regular testing helps you find gaps, improve responses, and keep things running smoothly.

Looking for help building and testing your AI agents? Reach out to our team at WDCS. We are your trusted chatbot development company in the UAE. We’ll make sure your AI systems are not only smart but also reliable, safe, and ready to work in the real world.

Deploy fault-tolerant AI agents using domain-specific prompt banks, regression testing, and policy behavior audits.

WDCS builds and tests intelligent agents with customized testing protocols, helping businesses in the UAE reduce chatbot failure points, improve task resolution rates, and meet user expectations across multiple languages and channels.